Safely Transferring Reinforcement Learning Agents under Adversarial Attacks

Motivation

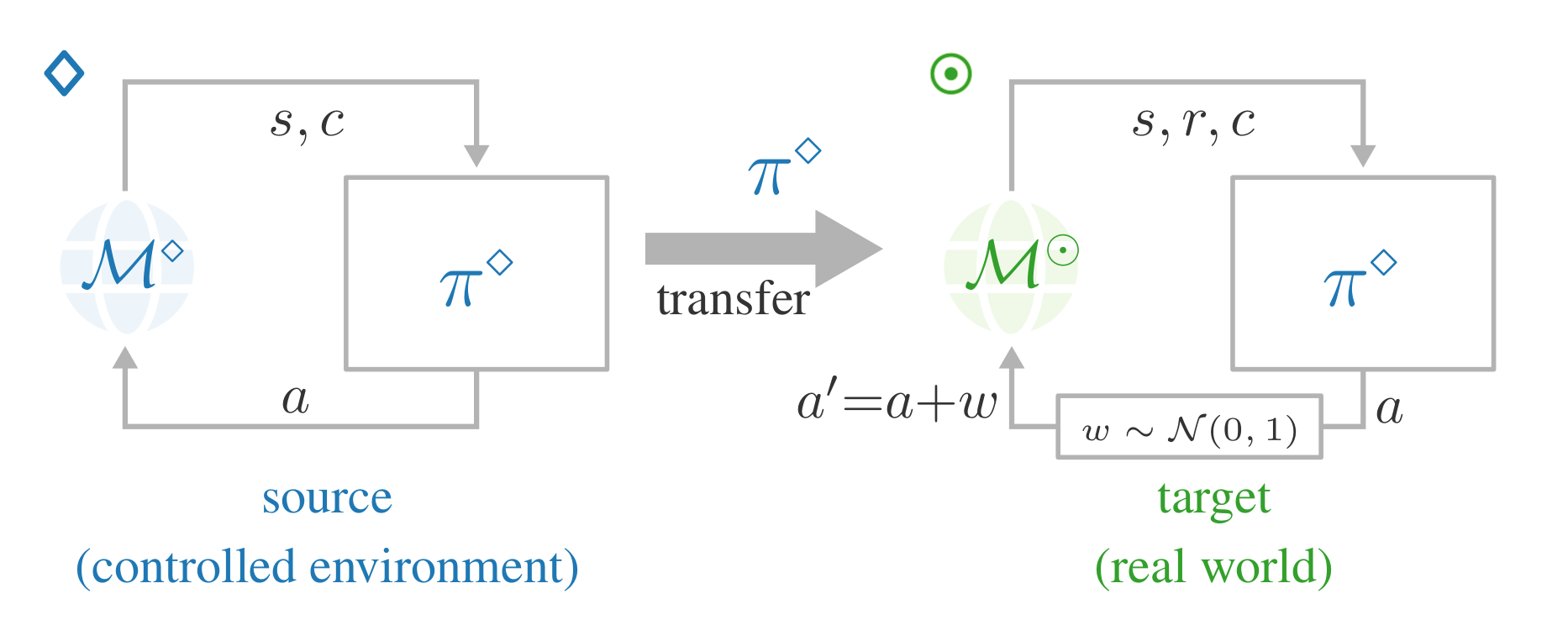

Safety is a paramount challenge for the deployment of autonomous agents. In particular, ensuring safety while an agent is still learning may require considerable prior knowledge (Carr et al., 2023; Simão et al., 2021). A workaround is to pre-train the agent in a similar environment, called the source task (⋄), where it can behave unsafely, and deploy it in the actual environment, called the target task (⊙), once it has learned how to act safely. Such situations are common when we train the agent in a simulator or a laboratory before deploying it in the real world.

Challenge

Previous work has shown that it is possible to safely transfer a reinforcement learning (RL) agent from a source task that preserves the safety dynamics of the target task (Yang et al., 2022). However, this assumption may be too strong for real-world applications. Building high-fidelity simulators requires extensive and meticulous engineering. Furthermore, small differences between the dynamics of the source and target tasks can be amplified as the agent interacts with the environment over many steps, leading to performance degradation in the target task.

Goal

This project investigates how to transfer an agent from a source task to a different target task while maintaining the same safety guarantees. In other words, this project investigates how to robustly perform safe transfers.

Overview

A potential approach is to train the agent in the source task under adversarial attacks to increase the robustness of the transfer. The project builds on the results from a previous ELLIS fellowship project (Hogewind et al., 2023) and the resulting codebase (https://github.com/LAVA-LAB/safe-slac).
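As a rough illustration of what training under adversarial attacks could look like, the sketch below perturbs an observation within a bounded region and keeps the perturbation that an estimated cost rates as most dangerous. The attack model is an assumption made for illustration only: the project description does not fix one, and the names `worst_case_observation`, `cost_fn`, and `epsilon` are hypothetical and not taken from the safe-slac codebase.

```python
import numpy as np


def worst_case_observation(obs, cost_fn, epsilon, n_candidates=16, rng=None):
    """Return the candidate observation with the highest estimated cost.

    Candidates are drawn uniformly from the L-infinity ball of radius
    `epsilon` around `obs`. `cost_fn` is a hypothetical stand-in for a
    learned cost critic; it is not part of the safe-slac codebase.
    """
    rng = np.random.default_rng() if rng is None else rng
    obs = np.asarray(obs, dtype=float)
    # Sample bounded perturbations, one row per candidate.
    deltas = rng.uniform(-epsilon, epsilon, size=(n_candidates,) + obs.shape)
    candidates = obs + deltas
    # Keep the candidate that the cost estimate rates as most dangerous.
    costs = np.array([cost_fn(c) for c in candidates])
    return candidates[int(np.argmax(costs))]


# Toy usage: the assumed cost grows as the first coordinate approaches 1.0.
obs = np.array([0.5, -0.2])
toy_cost = lambda o: -abs(1.0 - o[0])
adv_obs = worst_case_observation(obs, toy_cost, epsilon=0.1,
                                 rng=np.random.default_rng(0))
```

During training in the source task, the agent would act on `adv_obs` instead of `obs`. Random search is used here only because it is simple; a gradient-based attack would be a natural alternative.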

The main tasks are:

- Literature review and formulation of the problem statement.
- Training safe RL agents, such as SAC-Lagrangian.
- Implementing new methods to improve the robustness of the safe RL agents after the transfer.
- Designing experiments to evaluate the safety of the transfer.
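For readers unfamiliar with Lagrangian methods such as SAC-Lagrangian, the sketch below shows the generic idea: a multiplier penalizes constraint violations and is raised whenever the observed cost exceeds a limit. This is a minimal sketch of the textbook dual-ascent update, not the implementation used in the project; the function names, the learning rate, and the numbers are illustrative assumptions, and practical implementations usually estimate the cost with a critic and may parameterize the multiplier differently.

```python
def update_multiplier(lmbda, episode_cost, cost_limit, lr=0.01):
    """One dual-ascent step on the Lagrange multiplier, projected onto [0, inf)."""
    return max(0.0, lmbda + lr * (episode_cost - cost_limit))


def penalized_objective(episode_return, episode_cost, lmbda, cost_limit):
    """Lagrangian that the policy update tries to maximize."""
    return episode_return - lmbda * (episode_cost - cost_limit)


# Toy usage with made-up numbers: the cost exceeds the limit,
# so the multiplier grows and unsafe behavior is penalized more heavily.
lmbda = update_multiplier(0.0, episode_cost=30.0, cost_limit=25.0, lr=0.1)
```

When the cost stays below the limit, the same update shrinks the multiplier toward zero, so the agent is free to focus on the reward again.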

Learn more

Access http://lava-lab.org/projects/safetransfer/ for more details.

References

- Carr, S., Jansen, N., Junges, S., & Topcu, U. (2023). Safe Reinforcement Learning via Shielding under Partial Observability. AAAI.

- Simão, T. D., Jansen, N., & Spaan, M. T. J. (2021). AlwaysSafe: Reinforcement Learning without Safety Constraint Violations during Training. AAMAS, 1226–1235.

- Yang, Q., Simão, T. D., Jansen, N., Tindemans, S. H., & Spaan, M. T. J. (2022). Training and transferring safe policies in reinforcement learning. AAMAS 2022 Workshop on Adaptive Learning Agents.

- Hogewind, Y., Simão, T. D., Kachman, T., & Jansen, N. (2023). Safe Reinforcement Learning From Pixels Using a Stochastic Latent Representation. ICLR.